Citation: Baresi, L., Hu, D. Y. X., Stocco, A., & Tonella, P. (2025). Efficient Domain Augmentation for Autonomous Driving Testing Using Diffusion Models. arXiv preprint arXiv:2409.13661v3 [cs.SE]. To appear in ICSE 2025.

1. Paper Overview

This paper integrates pre-trained diffusion models with physics-based simulators to augment operational design domain (ODD) coverage for autonomous driving system (ADS) testing, achieving 20× more failure detections through real-time image-to-image translation validated by semantic segmentation while maintaining only 2% computational overhead via knowledge distillation to CycleGAN.

2. Background & Motivation

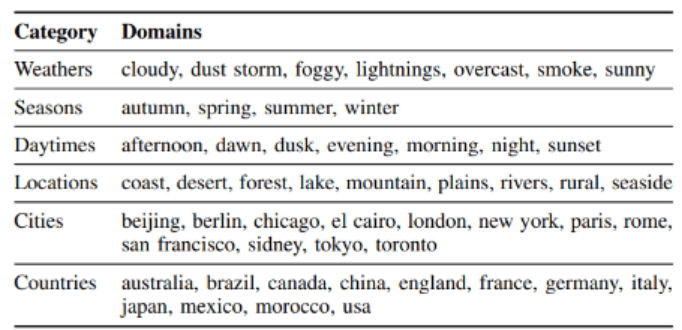

Existing simulation-based testing platform, such as Unity or Unreal suffers from limited ODD coverage. Simulators are often limited in the range of ODD they represent, as they primarily focus on photorealistic rendering and accurate physics representation. Hence, this caused the platform to fail to cover some ODD scenarios, which are critical for ADS testing. Traditional approaches to expanding ODD conditions demand extensive simulator engine redesign, domain expertise, and face a fidelity gap between virtual and real-world environments, while prior GenAI solutions like DeepRoad and TACTICS rely on training data corpora, target offline model-level testing of individual DNNs, and lack system-level validation. Neural simulators such as DriveGAN and GAIA-1 generate continuous driving streams but depend on learned physics models prone to inaccuracies (e.g., unrealistic collisions), making them unsuitable for precise failure detection. The core motivation is to combine GenAI’s diversity with physics-based simulation’s accuracy to expand testable ODD conditions (covering 52 domains across weather, seasons, daytime, and locations from 6 categories) without external datasets or major simulator modifications, enabling effective exposure of rare ADS failures that simulators alone cannot reveal.

3. Method

3.1 Problem Formulation

The framework intercepts camera-captured images from ADS in real-time, applies diffusion-based augmentation to transform backgrounds and environmental conditions while preserving foreground road semantics (lanes, geometry), validates augmented images via semantic segmentation, and feeds valid outputs to the ADS for driving command prediction, which the simulator then executes to close the testing loop. This approach focuses on label-preserving domains that maintain driving actions (excluding snow/rain due to friction changes) and targets system-level testing with metrics like out-of-bounds (OOB) incidents, collisions (C), failure track coverage (FTC, percentage of sectors with errors), relative cross-track error (RCTE, distance from lane center vs. nominal), and relative steering jerk (RSJ, steering smoothness).

3.2 Domain Augmentation Strategies

- Instruction-editing

- This takes image and editing instructions and produces output image with the editing instruction applied.

- Model used are InstructPix2Pix and SDEdit

- Inpainting

- This category employs a text-to-image diffusion model that performs inpainting. Instances of Inpainting models are Stable Diffusion, DALL-E, and Pixart-α.

- Inpainting with Refinement

- Adds a refinement step starting from partially-noised inpainted images, conditioned on edge maps from original images

3.3 Semantic Validation

An automated validator employing OC-TSS (One Class Targeted Semantic Segmentation) metric compares U-Net-predicted semantic masks of road, pedestrians, vehicles, traffic signs, and lights between original and augmented images, discarding augmentations below a conservative threshold of 0.9 to minimize invalid inclusions.

3.4 Knowledge Distillation

The final phase involves creating a fast and consistent neural rendering engine in the simulator, using outputs from the previous phases. The diffusion-based models, while effective for diversity, are computationally expensive and can produce potentially inconsistent augmentations, which may be problematic for the temporal coherence of a simulation.

4. Experiment

- RQ1 (semantic validity and realism): Do diffusion models generate images that are semantically valid and realistic? How is the semantic validator at detecting invalid augmentations?

- RQ2 (effectiveness): How effective are augmented images in exposing faulty system-level misbehaviors of ADS?

- RQ3 (efficiency): What is the overhead introduced by diffusion model techniques in simulation-based testing? Does the knowledge-distilled model speed up computation?

- RQ4 (generalizability): Does the approach generalize to complex urban scenarios and multi-modal ADS?

5. Setups

Experiments used Udacity simulator (single-camera lane-keeping, 40 sectors) with four ADS: DAVE-2, Chauffeur, Epoch (CNN-based), and ViT-based (Vision Transformer), testing 9 selected domains (3 in-distribution like sunny/summer/afternoon with VAE reconstruction error 0.067–0.074, 3 in-between like autumn/desert/winter, 3 out-of-distribution like dust storm/forest/night with error 0.078–0.218) against 3 baseline simulator domains. RQ4 extended to CARLA simulator (600×800 images, 3 cameras) with InterFuser ADS (multi-modal RGB+LiDAR) on Town05 urban scenarios (10 scenarios), augmenting 4 semantic classes (road, pedestrians, vehicles, signs, lights).

Results

- RQ1 (Semantic Validity & Realism): Human study validated 3% false positive rate (FP) for semantic validator, confirming automated accuracy; Inpainting with Refinement produced most realistic images (statistically significant via Mann-Whitney, small effect size 0.17 vs. Inpainting).

- RQ2 (Effectiveness): Augmented domains revealed 600 total failures across 4 ADS in Udacity (20× more than 3 simulator domains’ ~30 failures), with FTC reaching 87.5% (Epoch, out-of-distribution Instruction-editing) vs. 17.5% max for simulator. Instruction-editing exposed the most errors (e.g., 66 OOB + 8 C for DAVE-2 in out-of-distribution) due to higher domain distance (reconstruction error 0.218 vs. 0.082 for Inpainting with Refinement), though less realistic; CNN-based ADS showed lower RSJ (smoother steering) under augmentation, while ViT-based exhibited higher RSJ (3.40 vs. 1.22 baseline) due to global attention sensitivity to domain shifts. Retraining with augmented data reduced misbehaviors by 35.2% on average (up to 81.7% for foggy conditions). In CARLA, augmented domains exposed 25 red-light infractions and 3 vehicle collisions (zero in baseline), with route completion dropping from 84.26% to 69.70%.

- RQ3 (Efficiency): Diffusion models incurred 470% overhead (Inpainting with Refinement, 2172.6 ms/image, 74.1 min test run vs. 15.7 min baseline) but knowledge-distilled CycleGAN achieved 12.3 ms (Udacity) or 82 ms (CARLA), yielding 2% or 20% overhead respectively, making real-time testing practical.

- RQ4 (Generalizability): Successfully adapted to complex urban CARLA scenarios, validating multi-modal ADS (InterFuser) and exposing new failure modes unavailable in simulator.

Leave a comment